Streaming Audio and Video

Streaming audio and video have transformed the way we consume media, allowing us to access high-quality sound and visuals over the internet. This guide explores the fundamentals of digital audio and video, their compression techniques, and how they are transmitted effectively.

What is Digital Audio?

Digital audio is a digital representation of a sound wave from which the original audio signal can be reconstructed. When an acoustic wave enters the ear, it vibrates the eardrum and triggers nerve impulses to the brain, which the listener perceives as sound. Similarly, a microphone converts sound waves into an electrical signal, which can then be digitized.

The Human Ear and Sound Perception

The human ear can detect frequencies ranging from roughly 20 Hz to 20,000 Hz. Loudness is measured in decibels (dB), with 0 dB representing the threshold of hearing. For example, an ordinary conversation is around 50 dB, while sounds above 120 dB can cause pain. Notably, the dynamic range of human hearing, meaning the ratio of the loudest tolerable sound to the quietest audible one, exceeds a factor of one million in sound pressure, which corresponds to about 120 dB.
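The relationship between a ratio of one million and 120 dB can be checked with a short calculation. The sketch below assumes the ratio refers to sound pressure (amplitude), for which the decibel value is 20 times the base-10 logarithm of the ratio; the function names are illustrative only.

```python
import math


def amplitude_ratio_to_db(ratio: float) -> float:
    """Convert a sound-pressure (amplitude) ratio to decibels."""
    return 20.0 * math.log10(ratio)


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level in decibels back to a sound-pressure ratio."""
    return 10.0 ** (db / 20.0)


# A pressure ratio of one million spans roughly 120 dB.
dynamic_range_db = amplitude_ratio_to_db(1_000_000)

# A 50 dB conversation is a few hundred times the threshold pressure.
conversation_ratio = db_to_amplitude_ratio(50)

print(f"dynamic range: {dynamic_range_db:.1f} dB")
print(f"50 dB pressure ratio: {conversation_ratio:.0f}")
```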

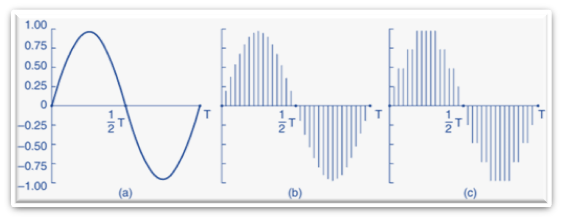

Converting Sound to Digital Format

To convert audio waves into digital form, an Analog-to-Digital Converter (ADC) samples the sound wave at regular intervals and records each sample as a number. According to the Nyquist theorem, a signal must be sampled at a rate of at least twice the highest frequency it contains in order to be captured accurately. For instance, audio CDs use a sampling rate of 44,100 samples per second, which allows frequencies up to 22,050 Hz to be captured.
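As a rough illustration of sampling, the sketch below samples a sine tone at regular intervals and rounds each sample to a signed integer, as a 16-bit ADC might. It also shows what the Nyquist limit implies: a tone above half the sampling rate shows up at a lower, aliased frequency. The function names and the ideal sine source are assumptions made for this example.

```python
import math


def nyquist_limit(sample_rate_hz: float) -> float:
    """Highest frequency that a given sampling rate can capture."""
    return sample_rate_hz / 2


def sample_sine(freq_hz: float, sample_rate_hz: float,
                n_samples: int, bits: int = 16) -> list[int]:
    """Sample a full-scale sine tone and quantize it to signed integers."""
    max_code = 2 ** (bits - 1) - 1  # 32767 for 16-bit samples
    return [
        round(max_code * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz))
        for n in range(n_samples)
    ]


def alias_frequency(freq_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency of a pure tone after sampling."""
    folded = freq_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


print(nyquist_limit(44_100))            # limit for CD audio
print(sample_sine(1_000, 44_100, 5))    # first few quantized samples
print(alias_frequency(30_000, 44_100))  # a 30 kHz tone is above the limit
```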

Digital Audio Formats

Digital audio is used in many areas, including telephony and music CDs. For instance, pulse code modulation (PCM) is used in telephony, with 8-bit samples taken 8,000 times per second, resulting in a data rate of 64,000 bps. In contrast, audio CDs use 16-bit samples at 44,100 samples per second, which gives 705.6 kbps per channel and 1.411 Mbps for stereo sound.
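The data rates above follow directly from multiplying the sampling rate, the sample size, and the number of channels. A minimal sketch of that arithmetic (the helper name is illustrative):

```python
def pcm_bit_rate(sample_rate_hz: int, bits_per_sample: int,
                 channels: int = 1) -> int:
    """Uncompressed PCM data rate in bits per second."""
    return sample_rate_hz * bits_per_sample * channels


telephony_bps = pcm_bit_rate(8_000, 8)               # one voice channel
cd_mono_bps = pcm_bit_rate(44_100, 16)               # one CD channel
cd_stereo_bps = pcm_bit_rate(44_100, 16, channels=2)

print(telephony_bps)   # 64000
print(cd_mono_bps)     # 705600
print(cd_stereo_bps)   # 1411200
```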

Audio Compression Techniques

To reduce bandwidth and storage requirements, audio files are often compressed. Compression algorithms fall into two categories: lossy and lossless. Lossy compression reduces file size by removing some audio data, while lossless compression retains all original data.
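The difference between the two categories can be shown with a toy example. The sketch below uses Python's standard `zlib` module for the lossless case and, for the lossy case, simply discards the low 8 bits of each 16-bit sample. The bit-dropping scheme is an assumption chosen for brevity and is not how real audio codecs work.

```python
import zlib

# A short, repetitive run of 16-bit sample values (made-up data).
samples = [0, 1200, 2350, 3400, 2350, 1200, 0, -1200] * 50

# Lossless: the decompressed bytes are identical to the original bytes.
raw = b"".join(s.to_bytes(2, "little", signed=True) for s in samples)
packed = zlib.compress(raw)
restored = zlib.decompress(packed)
lossless_ok = restored == raw


# Lossy: keep only the top 8 bits of each sample, halving the size.
def lossy_encode(values: list[int]) -> list[int]:
    return [v >> 8 for v in values]


def lossy_decode(codes: list[int]) -> list[int]:
    return [c << 8 for c in codes]


approx = lossy_decode(lossy_encode(samples))
max_error = max(abs(a - b) for a, b in zip(samples, approx))

print(len(raw), len(packed), lossless_ok)
print(max_error)  # nonzero: some information is gone for good
```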

Popular Audio Compression Formats

1. MP3 (MPEG Audio Layer III): This widely used lossy compression format significantly reduces file size while maintaining acceptable sound quality.

2. AAC (Advanced Audio Coding): As the successor to MP3, AAC offers better sound quality at similar bit rates and is commonly used in MP4 files.

How Compression Works

Lossy compression algorithms exploit the limitations of human hearing. For example, perceptual coding takes advantage of frequency masking, where a louder sound hides a softer sound at a nearby frequency. By omitting the components a listener cannot hear, these algorithms reduce file size without significantly affecting perceived audio quality.
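The idea of frequency masking can be sketched with a deliberately simplified model: a component is dropped if a much louder component lies close to it in frequency. The fixed 200 Hz band and 20 dB margin below are hypothetical values chosen for illustration; real perceptual coders rely on far more detailed psychoacoustic models.

```python
Component = tuple[float, float]  # (frequency in Hz, level in dB)


def audible_components(components: list[Component],
                       band_hz: float = 200.0,
                       margin_db: float = 20.0) -> list[Component]:
    """Keep only components not masked by a louder nearby component.

    Toy model: a component counts as masked when another component
    within band_hz of it is at least margin_db louder.
    """
    kept = []
    for freq, level in components:
        masked = any(
            abs(freq - other_freq) <= band_hz
            and other_level - level >= margin_db
            for other_freq, other_level in components
        )
        if not masked:
            kept.append((freq, level))
    return kept


spectrum = [(1000.0, 70.0), (1100.0, 30.0), (5000.0, 40.0)]

# The quiet 1100 Hz tone sits next to a loud 1000 Hz tone and is dropped;
# the 5000 Hz tone has no loud neighbour and is kept.
print(audible_components(spectrum))
```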

Digital Video: The Basics

Digital video consists of a sequence of frames, each made up of pixels. The quality of each frame is determined by the number of pixels and the color depth (bits per pixel). For instance, standard color video uses 24 bits per pixel, typically 8 bits each for red, green, and blue, allowing for about 16.8 million colors.
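The color count follows from the number of bits: each extra bit doubles the number of representable values. The sketch below assumes the common layout of 8 bits each for red, green, and blue; the helper names are illustrative.

```python
def color_count(bits_per_pixel: int) -> int:
    """Number of distinct colors representable at a given color depth."""
    return 2 ** bits_per_pixel


def pack_rgb(red: int, green: int, blue: int) -> int:
    """Pack three 8-bit channel values into one 24-bit pixel value."""
    return (red << 16) | (green << 8) | blue


def unpack_rgb(pixel: int) -> tuple[int, int, int]:
    """Split a 24-bit pixel value back into its three 8-bit channels."""
    return (pixel >> 16) & 0xFF, (pixel >> 8) & 0xFF, pixel & 0xFF


print(color_count(24))                      # 16777216
print(hex(pack_rgb(255, 128, 0)))           # an orange pixel
print(unpack_rgb(pack_rgb(255, 128, 0)))
```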

Frame Rates and Display Technologies

Common frame rates for video include:

→ 24 frames per second (fps): Standard for film.

→ 30 fps: Common for television broadcasts in NTSC regions (PAL and SECAM regions use 25 fps).

→ 60 fps: Used for high-definition video and gaming.

Video can be displayed in various resolutions, such as:

→ 320 x 240 pixels: Low resolution.

→ 640 x 480 pixels: Standard resolution.

→ 1280 x 720 pixels: High-definition (HD) resolution.

Video Compression Techniques

Given the high data rates required for uncompressed video, compression is essential for streaming over the internet. For example, uncompressed video at 640 x 480 pixels, 24 bits per pixel, and 30 fps requires about 221 Mbps, which is impractical for most users.
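The uncompressed data rate is the product of frame width, frame height, color depth, and frame rate. A quick sketch of the calculation, assuming 24 bits per pixel (the helper name is illustrative):

```python
def raw_video_bit_rate(width: int, height: int, fps: int,
                       bits_per_pixel: int = 24) -> int:
    """Uncompressed video data rate in bits per second."""
    return width * height * bits_per_pixel * fps


sd_bps = raw_video_bit_rate(640, 480, 30)
hd_bps = raw_video_bit_rate(1280, 720, 30)

print(f"640 x 480 at 30 fps:  {sd_bps / 1e6:.1f} Mbps")   # 221.2 Mbps
print(f"1280 x 720 at 30 fps: {hd_bps / 1e6:.1f} Mbps")   # 663.6 Mbps
```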

MPEG Compression

MPEG (Moving Picture Experts Group) is the name of a widely used family of video compression standards. MPEG compresses video by encoding individual frames with techniques similar to those JPEG uses for still images, while also removing redundancy between frames.

Key Features of MPEG

→ Interframe Compression: Reduces data by only storing changes between frames rather than complete frames.

→ Temporal Redundancy: Takes advantage of similarities between consecutive frames to minimize data.
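The idea of storing only the changes between frames can be shown with a toy example. The sketch below treats a frame as a flat list of pixel values and records only the positions that differ from the previous frame. This is a simplification for illustration: actual MPEG encoders work on blocks of pixels with motion compensation rather than on per-pixel differences, and the function names here are hypothetical.

```python
Delta = list[tuple[int, int]]  # (pixel index, new value) pairs


def encode_delta(previous: list[int], current: list[int]) -> Delta:
    """Record only the pixels that changed since the previous frame."""
    return [
        (index, new)
        for index, (old, new) in enumerate(zip(previous, current))
        if old != new
    ]


def apply_delta(previous: list[int], delta: Delta) -> list[int]:
    """Rebuild the current frame from the previous frame plus changes."""
    frame = list(previous)
    for index, value in delta:
        frame[index] = value
    return frame


# A bright two-pixel object moves one position to the right.
frame1 = [10, 10, 10, 10, 200, 200, 10, 10]
frame2 = [10, 10, 10, 10, 10, 200, 200, 10]

delta = encode_delta(frame1, frame2)
print(delta)                                  # [(4, 10), (6, 200)]
print(apply_delta(frame1, delta) == frame2)   # True
```

Only two of the eight pixels need to be stored for the second frame, which is the saving that temporal redundancy makes possible.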

Conclusion

Streaming audio and video have changed how media is consumed, giving users access to high-quality content with minimal delay. Both rest on the same foundations: sound and images are sampled into digital form, and compression then removes the data that listeners and viewers are least likely to miss. As compression algorithms continue to improve, streaming will become more efficient and more widely accessible.