Quality of Service (QoS) in Networking

Quality of Service (QoS) is a critical aspect of networking that ensures applications receive the performance they require, particularly in environments with varying traffic loads. As multimedia applications become increasingly prevalent, the demand for reliable network performance, typically expressed as a guaranteed minimum throughput and a bounded maximum latency, has intensified. This article covers the key parameters of QoS, why QoS is needed, and the techniques used to achieve it.

What is Quality of Service (QoS)?

Quality of Service refers to the set of technologies and techniques that manage network resources to provide a predictable level of performance for specific applications or services. QoS is essential for applications that require consistent performance, such as video conferencing, online gaming, and VoIP (Voice over Internet Protocol).

Key Parameters of QoS

QoS can be characterized by four primary parameters:

1. Bandwidth: The amount of data that can be transmitted over a network in a given time period. Different applications have varying bandwidth requirements.

2. Delay: The time it takes for a packet to travel from the source to the destination. Real-time applications, like telephony, require low delay to maintain conversation quality.

3. Jitter: The variation in packet delay, seen by the receiver as uneven packet arrival times. Applications like video streaming are sensitive to jitter, as inconsistent delivery can disrupt playback.

4. Loss: The percentage of packets that are lost during transmission. Some applications, such as voice and video, can tolerate a certain level of packet loss, while others, like file transfers, require reliable delivery.
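To make the delay, jitter, and loss definitions concrete, the following is a minimal sketch of how they might be computed from a packet trace. The function name `summarize_flow` and the trace format (sequence number mapped to a timestamp) are assumptions made for illustration, and jitter is taken here as the mean absolute difference between consecutive one-way delays, which is only one of several definitions in use.

```python
from statistics import mean

def summarize_flow(sent, received):
    """Summarize one flow from two hypothetical traces.

    sent:     dict mapping sequence number -> send time (must be non-empty)
    received: dict mapping sequence number -> arrival time (lost packets absent)
    Times may be in any unit as long as both traces use the same one.
    """
    # One-way delay of every packet that actually arrived, in send order.
    delays = [received[seq] - sent[seq] for seq in sorted(sent) if seq in received]
    # Loss: fraction of sent packets that never arrived.
    loss = 1 - len(delays) / len(sent)
    # Jitter (assumed definition): mean absolute change between consecutive delays.
    gaps = [abs(b - a) for a, b in zip(delays, delays[1:])]
    return {
        "mean_delay": mean(delays) if delays else None,
        "jitter": mean(gaps) if gaps else 0.0,
        "loss": loss,
    }

# Example trace in milliseconds; packet 2 is lost.
stats = summarize_flow(
    sent={0: 0, 1: 20, 2: 40, 3: 60},
    received={0: 50, 1: 80, 3: 110},
)
```

Bandwidth is left out of the sketch because measuring it needs packet sizes as well as timestamps.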

The Need for QoS

Network traffic is often unpredictable and bursty, so QoS mechanisms are essential for ensuring that critical applications receive the resources they need. Overprovisioning, that is, building the network with enough excess capacity to absorb peak load, can provide good QoS but is often cost-prohibitive. QoS techniques instead let a network manage its existing resources more efficiently, so that performance guarantees are met even during traffic spikes.

Techniques for Implementing QoS

To effectively implement QoS, several techniques are employed at the network and transport layers. Two prominent approaches are Integrated Services and Differentiated Services.

Integrated Services (IntServ)

Integrated Services is a QoS architecture that provides end-to-end service guarantees for individual flows. It uses the Resource Reservation Protocol (RSVP) to establish and maintain resource reservations at each router along the flow's path. This approach is particularly useful for applications that require strict performance guarantees, such as real-time video streaming, but it requires every router on the path to keep state for each reserved flow.

Differentiated Services (DiffServ)

Differentiated Services offers a more scalable approach to QoS by classifying packets into different service classes. Each class receives a specific level of treatment at each router, allowing for efficient resource allocation without the need for per-flow state management. This method is particularly beneficial in environments with a high volume of traffic, as it simplifies the management of QoS.
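As an illustration of class-based marking, here is a minimal sketch that maps traffic to a service class and computes the byte carrying the class in the IP header. The code points for EF (46) and AF41 (34) are the standard DSCP values, and the DSCP occupies the upper six bits of the former Type of Service byte. The port-based policy and the names `PORT_POLICY`, `classify`, and `tos_byte` are hypothetical and stand in for whatever classification rule an operator actually uses.

```python
# Standard DSCP code points for three common per-hop behaviours.
DSCP = {
    "EF": 46,    # Expedited Forwarding, typically voice
    "AF41": 34,  # Assured Forwarding class 4, low drop precedence
    "BE": 0,     # Best effort (default)
}

# Hypothetical policy: destination port -> service class.
PORT_POLICY = {5060: "EF", 3478: "AF41"}

def classify(dst_port):
    """Return the service class for a packet, defaulting to best effort."""
    return PORT_POLICY.get(dst_port, "BE")

def tos_byte(dscp, ecn=0):
    """Pack a 6-bit DSCP and a 2-bit ECN field into the 8-bit header byte."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must fit in 6 bits")
    return (dscp << 2) | (ecn & 0b11)

voice_tos = tos_byte(DSCP[classify(5060)])
```

Routers inside the DiffServ domain only need to look at this byte to pick a queue, which is why no per-flow state is required. On platforms that expose the `IP_TOS` socket option, an application could apply the value with `sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, voice_tos)`, although networks commonly re-mark packets at their edge.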

Traffic Shaping and Policing

To ensure that traffic conforms to the agreed-upon QoS parameters, techniques such as traffic shaping and traffic policing are employed.

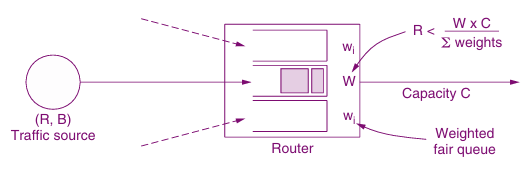

→ Traffic Shaping: This technique regulates the flow of data entering the network, smoothing out bursts and ensuring that traffic adheres to specified limits. Algorithms like the Token Bucket and Leaky Bucket are commonly used for this purpose.

→ Traffic Policing: This involves monitoring traffic flows to ensure compliance with QoS agreements. Packets that exceed the agreed-upon limits may be dropped or marked with lower priority.
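The two techniques can share one mechanism. Below is a minimal token bucket sketch, assuming tokens are counted in bytes and that the caller supplies the current time explicitly so the behaviour is deterministic. The class and method names are illustrative: `allow` plays the policer (a non-conforming packet is rejected, leaving the caller to drop or re-mark it), while `delay_until_conforming` plays the shaper (it reports how long to hold the packet instead).

```python
class TokenBucket:
    """Token bucket with fill rate `rate` (bytes/s) and burst size `capacity` (bytes)."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start with a full bucket
        self.last = 0.0         # time of the last refill, in seconds

    def _refill(self, now):
        # Add tokens for the elapsed time, never exceeding the bucket size.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def allow(self, size, now):
        """Policing: consume tokens and return True if the packet conforms."""
        self._refill(now)
        if size <= self.tokens:
            self.tokens -= size
            return True
        return False  # caller may drop the packet or mark it lower priority

    def delay_until_conforming(self, size, now):
        """Shaping: seconds to hold the packet; does not consume tokens."""
        if size > self.capacity:
            raise ValueError("packet larger than the bucket can never conform")
        self._refill(now)
        return max(0.0, (size - self.tokens) / self.rate)


bucket = TokenBucket(rate=1000, capacity=1500)
burst_ok = bucket.allow(1500, now=0.0)     # the initial burst fits
too_soon = bucket.allow(500, now=0.0)      # bucket is empty, so non-conforming
later_ok = bucket.allow(500, now=0.5)      # 0.5 s * 1000 B/s = 500 tokens
wait = bucket.delay_until_conforming(1000, now=0.5)
```

A leaky bucket differs mainly in that it drains at a constant rate and so does not let saved-up tokens be spent as a burst.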

Packet Scheduling

Packet scheduling algorithms play a crucial role in managing how packets are transmitted through routers. Common scheduling techniques include:

→ First-In, First-Out (FIFO): This simple method transmits packets in the order they arrive. It provides no isolation between flows, so when multiple flows compete for a link, one aggressive flow can fill the queue and delay or crowd out the others.

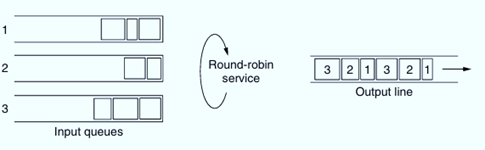

→ Fair Queueing: This algorithm allocates bandwidth fairly among competing flows, ensuring that no single flow monopolizes the network resources.

→ Weighted Fair Queueing (WFQ): An enhancement of fair queueing, WFQ assigns each flow a weight and shares bandwidth in proportion to those weights, so that higher-priority traffic can be given a larger share of the link.
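The effect of weights can be sketched with finish numbers: each packet is tagged with the virtual time at which it would finish under ideal weighted sharing, and packets are sent in increasing tag order. The version below is a simplified, offline illustration, not a router implementation. It uses the arrival time in place of a properly tracked virtual clock and assumes all packets are known in advance. The function name `wfq_order` and the flow names are hypothetical.

```python
def wfq_order(packets, weights):
    """Order packets by weighted finish number.

    packets: list of (arrival_time, flow, length) tuples
    weights: dict mapping flow -> positive weight
    Returns a list of (flow, length, finish) in transmission order.
    """
    last_finish = {}  # finish number of the previous packet of each flow
    tagged = []
    for idx, (arrival, flow, length) in enumerate(sorted(packets)):
        # A packet starts after it arrives and after its flow's previous packet.
        start = max(arrival, last_finish.get(flow, 0.0))
        # A larger weight shrinks the virtual service time.
        finish = start + length / weights[flow]
        last_finish[flow] = finish
        tagged.append((finish, idx, flow, length))  # idx breaks ties by arrival order
    return [(flow, length, finish) for finish, idx, flow, length in sorted(tagged)]


packets = [(0.0, "bulk", 1000)] * 2 + [(0.0, "voice", 600)] * 4
order = wfq_order(packets, weights={"voice": 2, "bulk": 1})
flows = [flow for flow, _, _ in order]
```

With equal weights the same function reduces to plain fair queueing, where shorter packets tend to be sent first because they finish sooner.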

Admission Control

Admission control is the process of determining whether a new flow can be accommodated within the existing network resources without violating QoS guarantees. This involves evaluating the current capacity and commitments to other flows, ensuring that the network can meet the performance requirements of the new flow.
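A minimal sketch of such a check, assuming each flow asks for a single fixed rate and each link has a fixed capacity in the same unit: the flow is admitted only if every link on its path still has room, and the reservation is recorded only on success. The function name `admit`, the link labels, and the dictionary bookkeeping are illustrative. Real admission control may also account for burst sizes and delay bounds.

```python
def admit(path, rate, capacity, reserved):
    """Try to admit a flow needing `rate` on every link in `path`.

    capacity: dict mapping link -> total capacity
    reserved: dict mapping link -> rate already committed (updated in place)
    Returns True and records the reservation, or False and changes nothing.
    """
    # Reject if any single link would be overcommitted.
    if any(reserved.get(link, 0) + rate > capacity[link] for link in path):
        return False
    # All links have room: commit the reservation on each of them.
    for link in path:
        reserved[link] = reserved.get(link, 0) + rate
    return True


capacity = {"A-B": 100, "B-C": 50}
reserved = {}
first = admit(["A-B", "B-C"], 40, capacity, reserved)   # fits on both links
second = admit(["A-B", "B-C"], 20, capacity, reserved)  # B-C would reach 60 > 50
third = admit(["A-B"], 60, capacity, reserved)          # A-B reaches exactly 100
```

Checking all links before committing any of them keeps a rejected request from leaving partial reservations behind.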

Conclusion

Quality of Service is an essential component of modern networking, particularly as the demand for reliable and high-performance applications continues to grow. By understanding the key parameters of QoS and the techniques used to implement it, network administrators can ensure that their networks meet the diverse needs of users and applications. Whether through Integrated Services or Differentiated Services, effective QoS management is crucial for maintaining a seamless and efficient network experience.